Predicting NFL outcomes using deep learning

I’ve started getting into football this season, something that I’ve tried to do on and off for several years. I’m not sure exactly what sparked this renewed interest—I think I’ve generally gained a much deeper appreciation of the sport and have more time and means to catch games. Regardless, my Sundays are now for football.

A few weeks ago, I was watching the 49ers play the Minnesota Vikings. The commentators mentioned that the 49ers hadn’t won at Minnesota since 1992 (I’m sad to report that the streak continues). In another game from that same week, the commentators mentioned that only a small percentage of teams that go 0-2 in their first two games make it to the playoffs. This inspired me to think about how effectively the results of future games could be predicted by the results of past games. I thought it could be a great deep learning project to see if we could predict outcomes, or even score spreads, given each team’s current records.

As usual, the code is here.

Data Preparation

I sourced the data on past game results from here. It consists of 2848 rows with these columns:

Season — 2017 - 2024

Week — Includes regular and postseason games

GameStatus — e.g. final or upcoming

PostSeason — Post or regular season game

Day

Date

AwayTeam

AwayRecord

AwayScore

AwayWin

HomeTeam

HomeRecord

HomeScore

HomeWin

AwaySeeding

HomeSeeding

To prepare the data for modeling, I did a few things:

Drop all non-regular season games (just to keep things simple)

Add a column for “spread”, i.e. the difference between home and away scores

Split the “record” columns into wins, losses, and draws

Drop these columns: Day, Date, AwaySeeding, HomeSeeding, AwayWin, GameStatus, PostSeason, Season, HomeScore, AwayScore

One-hot encode the team columns

Parse week column into integers

If a team won that week’s game, subtract 1 from its win count; do the same for the loss or draw count if it lost or drew. The record column already includes the result of that week’s game, so removing it is HUGELY important to prevent leakage.
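The record-related steps above can be sketched roughly as follows. This is a minimal illustration, not the code from the repo: it assumes the record columns are strings such as "3-1" or "3-1-1" (wins-losses-draws), and the helper names `split_record` and `prepare` are made up for this example. Filtering to regular-season games and parsing the week column are left out because the exact format of those columns isn't shown here.

```python
import pandas as pd


def split_record(record: str) -> tuple[int, int, int]:
    """Parse 'W-L' or 'W-L-D' into (wins, losses, draws). Format is assumed."""
    parts = [int(p) for p in record.split("-")]
    draws = parts[2] if len(parts) > 2 else 0
    return parts[0], parts[1], draws


def prepare(games: pd.DataFrame) -> pd.DataFrame:
    df = games.copy()
    # Spread is framed as home score minus away score.
    df["Spread"] = df["HomeScore"] - df["AwayScore"]

    # Split each record string into separate win/loss/draw counts.
    for side in ("Home", "Away"):
        parsed = df[f"{side}Record"].map(split_record)
        df[f"{side}Wins"] = parsed.map(lambda r: r[0])
        df[f"{side}Losses"] = parsed.map(lambda r: r[1])
        df[f"{side}Draws"] = parsed.map(lambda r: r[2])

    # The record already includes this game's result, so back it out
    # to get the record each team had going into the game.
    home_won = (df["Spread"] > 0).astype(int)
    away_won = (df["Spread"] < 0).astype(int)
    tied = (df["Spread"] == 0).astype(int)
    df["HomeWins"] -= home_won
    df["AwayLosses"] -= home_won
    df["AwayWins"] -= away_won
    df["HomeLosses"] -= away_won
    df["HomeDraws"] -= tied
    df["AwayDraws"] -= tied

    df = df.drop(columns=["HomeRecord", "AwayRecord", "HomeScore", "AwayScore"])
    # One-hot encode the team columns.
    return pd.get_dummies(df, columns=["HomeTeam", "AwayTeam"])


# Two made-up games, purely for illustration.
games = pd.DataFrame(
    {
        "HomeTeam": ["SF", "MIN"],
        "AwayTeam": ["MIN", "SF"],
        "HomeRecord": ["1-0", "0-1-1"],
        "AwayRecord": ["0-1", "1-0-1"],
        "HomeScore": [24, 17],
        "AwayScore": [10, 17],
    }
)
prepared = prepare(games)
print(prepared[["Spread", "HomeWins", "HomeLosses", "AwayWins", "AwayLosses"]])
```

In the first made-up game both teams end up with a 0-0 pre-game record, which is what we want: the model should only see what was known before kickoff.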

By the end of our data munging, our table looks like this!

Task 1: Predicting game winners

We will use a deep neural net with a sigmoid activation function applied on the output layer. First, we need to normalize the data:

from sklearn import preprocessing

X = outcome_df.drop(columns=["HomeWin"]).values.astype(float)
y = outcome_df[["HomeWin"]].values.astype(float)

# scale each feature column to unit L2 norm
X_scaled = preprocessing.normalize(X, axis=0)
# labels are already 0/1, so row-wise normalization leaves them unchanged
Y_scaled = preprocessing.normalize(y)

And then, we define and train the model. This article was a fantastic reference. Some judicious guessing landed me on 50 hidden-layer neurons with 50% dropout (which helps prevent overfitting). We also use binary cross-entropy as our loss function and the Adam optimizer to train the model.

# train the model
import torch
import torch.nn as nn
import torch.optim as optim

# train_X, train_y, test_X, test_y are assumed to be float tensors built from
# a train/test split of X_scaled and Y_scaled (the split is not shown here)
outcome_model = nn.Sequential(
    nn.Linear(71, 50), nn.ReLU(), nn.Dropout(0.5), nn.Linear(50, 1), nn.Sigmoid()
)
loss_fn = nn.BCELoss()
optimizer = optim.Adam(outcome_model.parameters(), lr=0.001)

n_epochs = 500
batch_size = 25
last_loss = 0
curr_loss = 10000
epochs = 0

# stop once the last-batch loss stops changing between epochs, or after n_epochs
outcome_model.train()
while abs(curr_loss - last_loss) > 1e-6 and epochs < n_epochs:
    epochs += 1
    last_loss = curr_loss
    for i in range(0, len(train_X), batch_size):
        Xbatch = train_X[i : i + batch_size]
        ybatch = train_y[i : i + batch_size]
        y_pred = outcome_model(Xbatch)
        loss = loss_fn(y_pred, ybatch)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    curr_loss = loss.item()  # plain float, detached from the graph
    # print(f"Epoch: {epochs}, Loss: {curr_loss}")

# switch to eval mode so dropout is disabled when scoring the test set
outcome_model.eval()
with torch.no_grad():
    y_pred = outcome_model(test_X)
accuracy = (y_pred.round() == test_y).float().mean().item()
print(f"Accuracy {accuracy:0.2%}")

The best accuracy I got was 60.77%, which is pretty good. It’s worth noting that home teams in the NFL win about 51-57% of their games, so always picking the home team is a great dummy model to compare against.
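To make the comparison against the "always pick the home team" dummy model concrete, here is a small sketch. The function name `always_home_accuracy` and the example labels are invented for illustration (the labels are not real game results); only the 60.77% figure comes from the run above.

```python
def always_home_accuracy(home_win_labels):
    """Accuracy of a dummy model that predicts a home win for every game."""
    labels = list(home_win_labels)
    if not labels:
        raise ValueError("need at least one game")
    return sum(1 for y in labels if y == 1) / len(labels)


# Made-up HomeWin labels (1 = home team won), NOT real results.
example_labels = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 0, 1, 0, 1, 0]
baseline = always_home_accuracy(example_labels)
model_accuracy = 0.6077  # best accuracy reported above

print(f"Baseline: {baseline:.2%}")
print(f"Model:    {model_accuracy:.2%}")
print(f"Lift:     {model_accuracy - baseline:+.2%}")
```

In practice you would pass the HomeWin column of the test split, so that the baseline and the model are scored on exactly the same games.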

Task 2: Predicting score spread

A much harder task is to predict the score spread of the game, which we frame as the difference between the home and away scores. I more or less followed this article to build the model. There were a few key differences from Task 1. First, we standardize the features with scikit-learn’s StandardScaler (zero mean and unit variance per column) instead of normalizing them:

from sklearn.preprocessing import StandardScaler

# fit on the training split only, then apply the same transform to both splits
scaler = StandardScaler()
scaler.fit(X_train_raw)
X_train = scaler.transform(X_train_raw)
X_test = scaler.transform(X_test_raw)

And then we build a regression model and use MSE loss to train. Again, judicious guessing was our friend (it’s so interesting to me that ML can do all these wonderful things, but the best tool we have for hyperparameter selection seems to be guess-and-check).

# train the model

spread_model = nn.Sequential(

nn.Linear(X.shape[1], 16),

nn.ReLU(),

nn.Linear(16, 8),

nn.ReLU(),

nn.Linear(8, 1),

)

# loss function and optimizer

loss_fn = nn.MSELoss() # mean square error

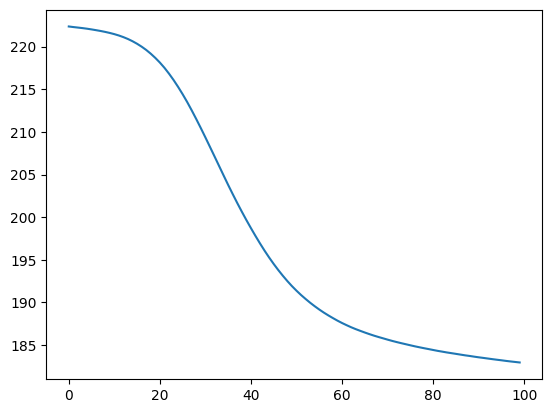

optimizer = optim.Adam(spread_model.parameters(), lr=0.0001)We got a nice validation graph (e.g. MSE on a holdout set of datapoints, computed after each training epoch) that looked like this by the end of our training runs:
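As a rough sketch of how a per-epoch validation curve like this can be produced: the loop below trains with MSE loss and records the holdout MSE after every epoch. The function name `train_with_validation`, the synthetic data, and the epoch and batch settings are all assumptions for illustration; this is not the exact loop from the repo.

```python
import torch
import torch.nn as nn
import torch.optim as optim


def train_with_validation(model, X_train, y_train, X_val, y_val,
                          n_epochs=20, batch_size=25, lr=1e-4):
    """Train with MSE loss; return the validation MSE after each epoch."""
    loss_fn = nn.MSELoss()
    optimizer = optim.Adam(model.parameters(), lr=lr)
    history = []
    for _ in range(n_epochs):
        model.train()
        for i in range(0, len(X_train), batch_size):
            pred = model(X_train[i : i + batch_size])
            loss = loss_fn(pred, y_train[i : i + batch_size])
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # Score the holdout set without tracking gradients.
        model.eval()
        with torch.no_grad():
            history.append(loss_fn(model(X_val), y_val).item())
    return history


# Synthetic stand-in data (random features, noisy linear target).
torch.manual_seed(0)
X = torch.randn(200, 10)
y = X[:, :1] * 7.0 + torch.randn(200, 1)

model = nn.Sequential(
    nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 8), nn.ReLU(), nn.Linear(8, 1)
)
history = train_with_validation(model, X[:160], y[:160], X[160:], y[160:], n_epochs=5)

best_mse = min(history)
print(f"MSE: {best_mse:.2f}")
print(f"RMSE: {best_mse ** 0.5:.2f}")
```

Plotting `history` against the epoch number gives the validation graph, and the RMSE is simply the square root of the best MSE, which puts the error back in units of points.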

Our best run gave the following MSE and RMSE:

MSE: 182.96

RMSE: 13.53

Task 2.5: Backtesting the betting model

Enough with the data modeling “academic side” of ML. It’s time to cross over to the dark side and start making some real money :)

I retrained the spread model with only data from 2017 - 2022. Then, I pulled some data on the betting lines and spreads for every game in the 2023 season. A spread bet has a favorite and an underdog, and you are betting on whether the favorite “covers” the spread. Hence, if the home team is the favorite and the spread is 6.0, a bet on the favorite wins only if the home team wins by more than 6 points (a win by exactly 6 is a push, and the wager is refunded).

In theory, our model should predict the score difference between the home and away teams, so if it predicts that the favorite will cover the spread, we should take the bet. Assuming that a winning bet pays out $100 for every $110 wagered (in addition to returning the wager), we can compute our return. Here are the results I got for my model:

Bet: $8,100.00

Payout: $10,500.00

Profit: $2,400.00

MOIC: 1.30x

IRR: 244.63%

Not too shabby!
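For anyone who wants to check the arithmetic, here is a minimal sketch of settling a list of bets under the payout rule described above ($100 won per $110 risked, plus the stake back). The function `settle_bets` is hypothetical, and the 55-wins-out-of-81 split with a flat $100 stake is an assumption: it was chosen only because it is consistent with the totals reported here, and the actual number of bets isn't stated. Pushes are ignored for simplicity.

```python
def settle_bets(outcomes, stake=100.0, risk=110.0, win=100.0):
    """Settle flat-stake bets. `outcomes` holds True for each winning bet."""
    outcomes = list(outcomes)
    total_bet = stake * len(outcomes)
    # A winning bet returns the stake plus stake * (win / risk) in winnings.
    payout = sum(stake + stake * win / risk for won in outcomes if won)
    profit = payout - total_bet
    moic = payout / total_bet if total_bet else float("nan")
    return {"bet": total_bet, "payout": payout, "profit": profit, "moic": moic}


# Assumed split: 81 bets of $100, 55 of them winners (illustrative only).
example_outcomes = [True] * 55 + [False] * 26
summary = settle_bets(example_outcomes)

print(f"Bet: ${summary['bet']:,.2f}")
print(f"Payout: ${summary['payout']:,.2f}")
print(f"Profit: ${summary['profit']:,.2f}")
print(f"MOIC: {summary['moic']:.2f}x")
```

MOIC here is just the total payout divided by the total amount wagered, so anything above 1.0x means the strategy made money over the backtest.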

One observation I made while looking over the actual predictions was that the model is biased toward picking the home team as the winner. It’s not egregious: it predicted that 61.8% of games in the 2023 season would be home victories, compared to the 57% that actually were, though I think that figure was itself an outlier. We certainly wouldn’t want the model to learn to always guess that the home team will win, so a good follow-up step would be to curb this bias and bring the model’s predictions more in line with the average share of home wins in a season.

Conclusion

I have a few ideas for how to improve the model and further this work:

Evaluate different modeling approaches, or even ensemble them. I didn’t look into RFs or SVMs. An ensemble approach might be even better, though some other repos I found explored this and only saw a marginal improvement.

Add additional features. The model as a whole does okay, but there are many more informative features we could add, such as weather, team stats, or even starting lineups.

Explore transformer or RNN-based architectures. NFL data is inherently sequential. If a team is on a hot streak, maybe they’ve figured out a winning programme. If a team is on a losing streak, perhaps something is broken in the organization and the team is headed for a disastrous season. RNNs or LSTMs could be a great way to capture this time-dependency. It might be interesting to explore transformers as well, as the idea of self-attention helping the model focus on “results” from previous or relevant games is interesting. It would be helpful for a model to, for example, take into account transitive dependencies to bias the predictions (e.g. if team A beats team B and team B beats team C, shouldn’t team A be more likely to beat team C?). I’m already taking the opportunity to call the model/blog post covering this idea Next Foundational LLM.

Enhance the betting model with dynamic wagers. The betting backtest was somewhat of an afterthought, a way to quantify and benchmark the effectiveness of the model in a more real-world sense. If we actually wanted to optimize the model for making money, we should adapt our betting algorithm to take into account not just the predicted probability, but also the expected payout. For example, if we shouldn’t make a spread bet, should we make a moneyline bet instead? What about extending the model to handle over/unders? What about looking for parlay opportunities?
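As a starting point for the last idea, weighing the predicted probability against the payout comes down to an expected-value calculation. The sketch below assumes the same $110-to-win-$100 pricing used in the backtest; the function names are made up, and `p_win` stands for whatever probability of covering the model would produce (the current spread model doesn't output one directly).

```python
def breakeven_probability(risk=110.0, win=100.0):
    """Win probability at which a bet risking `risk` to win `win` breaks even."""
    return risk / (risk + win)


def expected_profit(p_win, risk=110.0, win=100.0):
    """Expected profit of one bet: win `win` with p_win, lose `risk` otherwise."""
    return p_win * win - (1.0 - p_win) * risk


threshold = breakeven_probability()
print(f"Break-even win rate: {threshold:.2%}")

# Only bet when the estimated chance of covering clears the break-even rate.
for p in (0.50, 0.55, 0.60):
    decision = "bet" if p > threshold else "pass"
    print(f"p_win={p:.2f} -> expected profit ${expected_profit(p):+.2f} ({decision})")
```

A dynamic wager scheme could then scale the stake with the size of the edge over the break-even rate instead of betting a flat amount on every game.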

Although I don’t anticipate risking any real money any time soon, running the model and seeing which of my game predictions actually come true has added an extra dimension of fun to my Sunday football watching! For example, I accurately predicted the outcomes of Chiefs vs. Falcons, Bills vs. Jaguars, and Seahawks vs. Dolphins during week 3 of the 2024-2025 season. This should be an exciting season!